CLIFER: Continual Learning with Imagination for Facial Expression Recognition

Super Short Description

- Paper Link

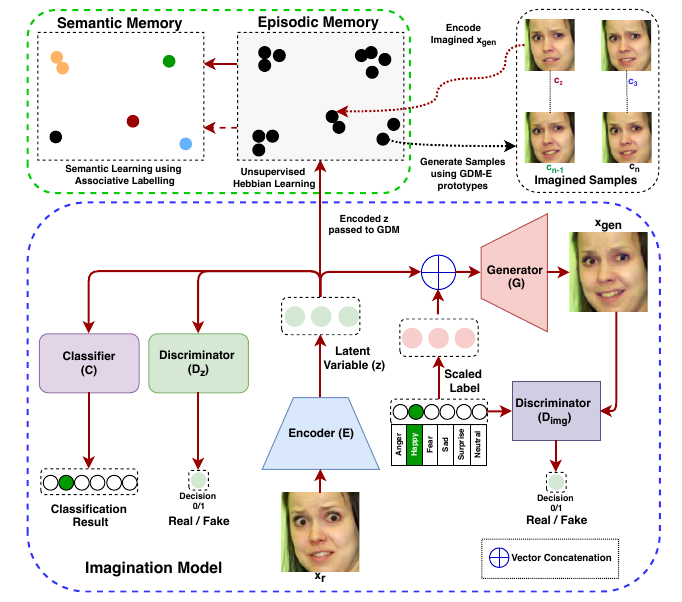

- This paper addresses facial expression recognition over 6 expressions (for example, anger and happiness). Its novelty lies in its Imagination Model, which generates new images of the same person with different facial expressions from the original image. Another interesting aspect is the integration of this Imagination Model as a data augmentation module for the main model, the GDM module.

A Short Background

- FER : Facial expression recognition

- Dual memory: Episodic and semantic.

- Episodic memory example: David Beckham's smiling face in the last video. This memory should change fairly rapidly.

- Semantic memory example: A typical European smiling face. This memory should change relatively slowly.

- Continual Learning (CL): A methodology in which the model keeps improving as it receives data incrementally over time. Similar in spirit to online learning.

- GDM module: A continual learning model. It implements the human-inspired dual memory system using artificial neurons.

Understanding the Paper

Imagination Model

The model is adapted from ExprGAN. It has two discriminators: \(D_z\) to regularize the latent space embedding and \(D_{img}\) to regularize the generated images. \(D_z\) ensures that the latent space embeddings of generated images are similar to the original image embeddings, which helps reduce distortion in the generated images. \(D_{img}\) ensures that the generated images actually show the intended facial expressions.
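To make the role of the two discriminators concrete, here is a minimal NumPy sketch of only the adversarial part of a generator loss. It assumes both discriminators output probabilities in (0, 1); the function names and the weights `w_z` and `w_img` are hypothetical, and the actual ExprGAN objective contains further terms that are not shown here.

```python
import numpy as np

def bce(pred, target):
    """Binary cross-entropy averaged over a batch of probabilities."""
    pred = np.clip(pred, 1e-7, 1 - 1e-7)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def generator_adversarial_loss(d_z_out, d_img_out, w_z=1.0, w_img=1.0):
    """Generator-side loss: push both discriminators toward outputting 1 ("real").

    d_z_out   -- D_z scores for the latent embeddings (assumed probabilities)
    d_img_out -- D_img scores for the generated images (assumed probabilities)
    w_z, w_img -- hypothetical weights balancing the two terms
    """
    loss_z = bce(d_z_out, np.ones_like(d_z_out))
    loss_img = bce(d_img_out, np.ones_like(d_img_out))
    return w_z * loss_z + w_img * loss_img

# The loss shrinks as both discriminators are fooled more strongly.
fooled = generator_adversarial_loss(np.array([0.9, 0.8]), np.array([0.9, 0.7]))
caught = generator_adversarial_loss(np.array([0.1, 0.2]), np.array([0.2, 0.1]))
print(fooled < caught)
```

The point of the sketch is only that the generator is trained against two separate critics at once, one looking at embeddings and one looking at images.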

How Does the GDM Module Ensure Rapid Non-Overlapping Learning in Episodic Memory?

- Non-overlapping means that each embedding is stored as it is, as far as possible. The tendency to encode features common to embeddings of the same facial expression is discouraged.

- The learning rate is high.

- Its objective is to retain the input with a high degree of precision, so it is made quite eager to add new neurons.
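The points above can be illustrated with a heavily simplified grow-or-update rule. This is a sketch under stated assumptions, not the GDM implementation: the activation function `exp(-distance)`, the names `present` and `run`, and all threshold and learning-rate values are hypothetical choices made for illustration.

```python
import numpy as np

def present(neurons, x, insert_threshold, learning_rate):
    """Show input x to a memory and return the updated list of neuron weights."""
    x = np.asarray(x, dtype=float)
    if not neurons:
        return [x.copy()]
    distances = [np.linalg.norm(x - w) for w in neurons]
    best = int(np.argmin(distances))
    activation = np.exp(-distances[best])  # 1.0 means a perfect match
    if activation < insert_threshold:
        # Best match is too poor: store the input itself as a new neuron.
        return neurons + [x.copy()]
    # Otherwise move the best-matching neuron toward the input.
    updated = list(neurons)
    updated[best] = neurons[best] + learning_rate * (x - neurons[best])
    return updated

def run(inputs, insert_threshold, learning_rate):
    """Present a sequence of inputs to an initially empty memory."""
    neurons = []
    for x in inputs:
        neurons = present(neurons, x, insert_threshold, learning_rate)
    return neurons

inputs = [[0.0, 0.0], [0.3, 0.0], [1.0, 1.0]]
# "Eager" setting: high insertion threshold and high learning rate.
eager = run(inputs, insert_threshold=0.9, learning_rate=0.5)
# "Reluctant" setting: lower insertion threshold and lower learning rate.
reluctant = run(inputs, insert_threshold=0.5, learning_rate=0.1)
print(len(eager), len(reluctant))
```

With the eager setting, nearby inputs are kept as separate neurons rather than merged, which is the non-overlapping behaviour described for episodic memory. The reluctant setting merges the two close inputs into one neuron, which corresponds to a higher bar for adding neurons.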

How Does the GDM Module Ensure Slow Overlapping Learning in Semantic Memory?

- The learning rate is kept low.

- It has a relatively higher bar for adding new neurons.

- This memory takes as input the best representations of face latent embeddings from the episodic memory. It uses the mode (the most frequent value) to fix the feature prototypes for the different expression types.
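As a small illustration of using the mode, the sketch below assigns an expression label to a prototype by taking the most frequent label among the inputs it has matched. The function name `prototype_label` and the example label history are hypothetical; how GDM actually tracks labels per neuron is not specified here.

```python
from collections import Counter

def prototype_label(label_history):
    """Return the mode (most frequent label) among the labels seen by one neuron."""
    if not label_history:
        raise ValueError("label_history must not be empty")
    return Counter(label_history).most_common(1)[0][0]

# Hypothetical labels of the inputs that one semantic neuron has matched so far.
history = ["happiness", "happiness", "surprise", "happiness", "anger"]
print(prototype_label(history))  # prints "happiness"
```

Taking the mode makes the prototype's label robust to a few atypical or mislabeled inputs, which fits a memory that is meant to change slowly.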